#Code Similarity API


The modder argument is a fallacy.

We've all heard the argument, "a modder did it in a day, why does Mojang take a year?"

Hi, in case you don't know me, I'm a Minecraft modder. I'm the lead developer for the Sweet Berry Collective, a small modding team focused on quality mods.

I've been working on a mod, Wandering Wizardry, for about a year now, and I only have about a third of an update's worth of new content.

Quality content takes time.

Anyone who does anything creative will agree with me. You need to make the code, the art, the models, all of which take time.

One of the biggest bottlenecks in anything creative is the flow of ideas. If you have a lot of conflicting ideas you throw together super quickly, they'll all clash with each other, and nothing will feel coherent.

If you instead try to come up with ideas that fit with other parts of the content, you'll quickly run out and get stuck on what to add.

Modders don't need to follow Mojang's standards.

Mojang has a lot of standards on the type of content that's allowed to be in the game. Modders don't need to follow these.

A modder can implement a small feature in 5 minutes, disregarding the rest of the game and how the feature fits in with it.

Mojang has to make sure it works on both Java and Bedrock, make sure it fits with other similar features, make sure it doesn't break progression, and listen to the whole community on that feature.

Mojang can't just buy out mods.

Almost every mod depends on external code that Mojang doesn't have the right to use. Forge, Fabric API, and Quilt Standard Libraries are all unusable in base Minecraft, as are the dozens of community-maintained libraries for mods.

If Mojang were to buy a mod to implement it in the game, they'd need to partially or fully reimplement it to be compatible with the rest of the codebase.

Mojang does have a tendency to *hire* modders, but that's different from outright buying mods.

Conclusion

Stop weaponizing us against Mojang. I can speak for almost the whole modding community when I say we don't like it.

Please reblog so more people can see this, and to put an end to the modder argument.

#minecraft#minecraft modding#minecraft mods#moddedminecraft#modded minecraft#mob vote#minecraft mob vote#minecraft live#minecraft live 2023#content creation#programming#java#c++#minecraft bedrock#minecraft community#minecraft modding community#forge#fabric#quilt#curseforge#modrinth


“Cooking up comfort”

mpreg!Choso x you

next part here<3

Summary: Neither you nor Choso imagined that this could ever be possible; at first it was just a thought that crossed your mind. Since Choso was a half-curse with connections to the sorcery world, would it be too crazy to find a way for him to get pregnant? Well, after a couple of months of extensive research, and ten weeks later, Choso was expecting your child.

Even though it turned out to be possible for him to get pregnant, it wasn’t common; a man getting pregnant was still rare, even in a sorcery world where plenty of strange things were normal. It was truly a blessing for both of you. It had its risks and complications, but he really wanted this, to form a family with you, and you would be there for him every step of the way.

or

Where you cook lunch for your pregnant boyfriend and he gets too emotional (and nauseous)

contents: Mpreg Choso x female reader, fluff and slight angst, emotional hurt/comfort, slice of life, Unconventional Pregnancy, Alternate Universe - Canon Divergence

Notes: Long story short, I was using Janitor AI, my API code was about to expire, and I was bored, so I came up with this JSKSJS. It wasn’t exactly like this; this is more of an inspired fic.

I mention this briefly in the fic, but basically they did their research until they found a doctor who could help them. The doctor was also a sorcerer; of course average people didn’t know this, but other sorcerers did.

The doctor’s cursed technique was similar to Mahito’s, in that he could modify his patients’ bodies without necessarily having to use a scalpel. He temporarily modified Choso’s reproductive system, giving him a female reproductive system until he conceived and his baby was born.

For you to be able to impregnate Choso, the doctor first modified your reproductive system into a male one. This was temporary, like Choso’s procedure, but with a slight difference: with an incantation the doctor gave you, you could switch your reproductive system from male to female and vice versa. You couldn’t do it too often, since you had to wait a few hours between changes because of how energy-consuming it was, but aside from that, you could change it as you pleased. Choso’s procedure wasn’t like this, because to have a baby he needed a stable, fertile reproductive system; that’s why he had to keep a female one for his whole pregnancy, until the baby was born.

𓈒 ݁ ₊ ݁

Choso remembers well how he felt that day, when all your efforts and the procedures you both had to go through finally paid off. He felt shock, excitement, fear, extreme happiness; truly an adrenaline-fueled mix of emotions. After all, it’s not every day you’re a man who gets told that he is pregnant.

He was beyond excited to start a little family with you, the love of his life. He was grateful to have you by his side, supporting and loving him so sincerely; it was a genuine kind of love. He was totally convinced that you two were soulmates, and the passion with which he sometimes explained that hypothesis to you made you giggle.

It had already been ten weeks since you two received the good news, but it hadn’t been exactly easy for him. The doctor had explained this beforehand, so you two would know the risks and complications involved in Choso’s unique condition. Since he had a male body with a now female reproductive system, his body had to adapt and prepare for it, and now that he was finally pregnant, some symptoms were significantly stronger for him than they would be in an average pregnancy.

On the bright side, since he had his blood manipulation technique, he could control things like his blood pressure, which was a really great advantage for him. On the other hand, things like mood swings, cravings (and food aversions), and morning sickness were just a pain in the ass.

Choso couldn’t help but feel guilty that you had to put up with him; you were so kind to him it made him want to cry. Right now, he was reading a knitting and crocheting magazine next to you on the sofa. He enjoyed creating yarn pieces for his loved ones, but mostly it helped him feel more human in a way; it had significantly helped with his existential crisis as a half-curse, so it was a great hobby for him.

Focused on reading, he had a small frown on his face, which you thought was adorable because he didn’t even notice when he did it. You gently smoothed the wrinkles between his eyebrows with your thumb, getting Choso’s attention and making him smile awkwardly when he realized he had been frowning unconsciously.

“I was doing it again.” He chuckled, now gently holding your hand that was caressing his frown. “I found an interesting pattern that I can do with a thin yarn.” He showed you on the magazine. “I think it would be perfect for the baby, because I need a special yarn for them so it doesn’t hurt their skin.” He explained with his usual calm demeanor, and the idea of him crocheting things for the baby made your heart melt.

You took a look at the magazine, giggling at the images of tiny gloves and baby hats. “Yeah, our baby will look so cute with the tiny crochet pieces you’ll do for them, and it will also keep them warm, it’s perfect.”

You looked at the clock on the wall and noticed that it was time for lunch. Ever since Choso’s cravings started, you had improved your cooking skills so much that cooking him the specific dishes he craved had become a personal challenge for you.

You stood up from the couch and stretched your arms above your head. Choso’s eyes subtly followed you as your t-shirt lifted just enough to show a strip of skin on your lower abdomen, making him blush slightly and look back down at his magazine.

“I’ll cook lunch for us.” You announced and Choso looked at you again, with a special shine in his eyes. “Is there something specific that you want?”

Choso thought about it for a moment; he loved the way you cooked, and having lunch next to you was one of his favorite parts of the day. “What about avocado pasta? It’s been a while since I’ve eaten avocado,” he suggested.

You didn’t have a single avocado in the house. But it wouldn’t stop you.

“Sounds really good, avocado pasta it is.” You smiled at him, turning around to walk towards the main entrance. “I’ll be right back, I’ll go get some avocados!”

Before he could say a word, you were already outside. Half an hour later, you came back home with a bag of three avocados and some cream, but you noticed a certain smell in the air. You walked into the kitchen to see Choso stirring a pot of boiling noodles, and he once again looked at you with that awkward smile of his.

“But Choso, I was going to cook lunch for us,” you said, leaving the avocados on the kitchen counter to walk towards him.

“I know, I know! I just wanted to do something while you were at the store. I want to help you as much as I can while I’m still not useless, you know?” He answered with a slight pout, he appreciated that you wanted to do things for him, but sometimes he felt like a burden, so he wanted to keep helping you now that his baby bump wasn’t big or heavy.

You caressed his strong arm, resting your cheek against his skin, “You won’t be useless, Choso. You’re carrying our baby and it takes lots of effort.” You said reassuringly. “I just want you to be relaxed, alright?” You explained to Choso your desire for him to experience a peaceful and relaxed pregnancy as much as he could, knowing that his journey wouldn’t be as easy as a regular pregnancy.

He put aside the wooden spoon he was using to stir the noodles and sighed, his gaze fixed on the bubbling water. Your arms moved to wrap around his waist, and your hands found their way to caress the small bump.

“Thank you for boiling the noodles, though.” You murmured, your cheek resting against his back. “But please don’t worry about cooking or washing dishes, let me take care of it so you and the baby can crochet something or watch a movie on TV.” A smile appeared on your face when you felt him chuckle.

He turned around to cup your face with his big hands, leaning in to leave a kiss on your forehead, “Alright, I can’t argue with you.” He smiled softly, looking at you with those tired eyes. “I’ll go to the living room, but please tell me if you need me to help.”

You nodded, standing on your tippy toes to leave a kiss on his lips.

𓈒 ݁ ₊

Choso understood completely that you wanted to do things for him, to make his day a little bit easier, he would do the same thing in your place, or he might be even worse. But again, he felt guilty.

It was most likely a product of his condition and hormones, but he just felt really bad that you were doing so much for him every day, things he could perfectly well do himself. With this weight on his heart, he watched you from the sofa as you placed the two plates of food on the table.

“Avocado pasta for your majesty.” You said playfully, taking your seat at the table.

Choso chuckled at your words, taking a seat next to you. He picked up his fork and looked down at the vibrant plate: the noodles were coated in the creamy avocado sauce you had prepared just for him. Without wasting any more time, he speared a few short noodles and started to eat.

But as he chewed, he started to think about how weird it felt to eat hot mashed avocado. The taste of the ripe fruit almost reminded him of a trash bag full of rotten vegetables; that smell was stuck in his nose, and the slimy texture didn’t help. He started to chew more slowly, lowering the fork and setting it down with a soft clink against the plate.

While you were eating, you noticed Choso’s change of demeanor and an expression on his face as if he were about to cry and throw up, and it worried you. “Choso? Are you alright?” you asked carefully, with a hint of concern.

He looked at his almost untouched plate with a mix of guilt and frustration. He felt overwhelmed by his fluctuating emotions, and this sudden aversion to the flavor of avocado only added to his distress.

“I’m sorry, love. I just… I can’t handle the taste of avocado right now,” Choso replied, his voice filled with frustration. He quickly looked at you. “It’s not your fault, I know you put effort into cooking for us. It’s just… this pregnancy is messing with my taste buds and it’s driving me crazy.”

He sighed, his eyes welling up with tears. “I hate feeling like this, like I’m being ungrateful for all the things you do for me,” he continued, his voice wavering. “But the hormones are making everything so intense and overwhelming. I just want to be normal again and enjoy the things I used to.”

You felt a wave of sadness wash over your heart. It was difficult to see him like this, knowing that his pregnancy was amplifying his frustration even more, and you wished you could take all his suffering away from him.

You moved his plate aside and got closer to him, protectively wrapping him into your arms, his cheek rested against your collarbone while he hid in the crook of your neck. “It’s alright, it’s alright…” You cooed really softly, caressing his hair and letting him cry as much as he needed.

Choso shook his head. “It’s not alright, you even had to go out to buy the avocados to cook what I asked for, and now I can’t even eat it.” He looked up at you, his eyes big and filled with guilt. “I didn’t want to make you upset, I just really can’t stand the taste of it right now, b-but I can make up for it! I can do whatever you want, I can cook something for you, just ask and I’ll-”

Before he could start to hyperventilate any further, you cupped his face in your hands, making him look into your eyes with his own glossy ones. When you pressed your lips to his forehead so softly and tenderly, he could only pout because of how endearing it was; you were too good to him, and he didn’t feel like he deserved it.

“Breathe, baby, just try to breathe deeply for a moment.” You instructed gently, helping Choso to feel more grounded.

Wiping some stray tears with your thumbs, you continued to talk to him. “I’m not upset, pretty thing, it’s just avocado and some noodles, I couldn’t possibly get mad at you for it.” You smiled at him, your soft giggles making him feel more at ease.

Even though that pet name made him blush slightly, he still had some lingering self-deprecating thoughts. He opened his mouth to keep talking, but you stopped him right away with a short kiss.

You continued, with the softest voice. “I know this has been a really difficult journey for you, baby. I know you wish you could be like before, but I want you to remember that it will all be worth it in the end.” You smiled softly and reassuringly at him, “Some months ago, we wouldn’t even think this could be possible, but now look at you! You’re ten weeks along and you’re doing it amazingly.”

He smiled softly, his crying starting to cease. He leaned his head back on your shoulder, finding comfort in your warmth. “I don’t think I ever imagined myself being pregnant,” he said, his voice tinged with a mix of vulnerability and gratitude. “Well, I never really pictured myself living a peaceful life… But now, I feel so glad that I get to experience it with you.”

Choso sat up straight, wiping the remaining tears from his cheeks and sniffling a couple of times. “Thank you for putting up with me, even when I’m difficult sometimes.” He reached out to cup your face with his hand, gently caressing your cheek with his thumb.

You nuzzled into his touch, “Your body is going through big changes, it’s natural that you feel overwhelmed. But you’re not alone, we’re a team.” You kissed his palm, your lips lingering for a moment before you gently pulled away.

Choso's eyes reflected gratitude as he let out a small sigh. "I appreciate that more than I can express."

Now that he was calmer, you remembered that he still had to eat something; he needed to eat well, for himself and the baby.

"How about we distract ourselves for a bit? I'll cook something different, and you can join me in the kitchen. It might be a good way to take your mind off things."

His eyes brightened with interest. "Cook together?"

"Yeah," you chuckled. "We'll make it a You and Me cooking special. What do you think?"

Notes: I’m not completely satisfied so I’ll write more one-shots for this mpreg Choso au !!

likes and reblogs are greatly appreciated!! it motivates me a lot<3

#jujutsu kaisen#fanfic#jjk choso#jjk imagines#jjk mpreg#jjk x reader#jjk fanfic#choso imagine#choso one shot#choso#choso kamo#choso x reader#mpreg choso#mpreg fic


Just learnt how to turn HTML to Image

Friday 6th October 2023

Yeah, just figured out how to turn HTML tags into an image you can download and save! The idea popped up because I came across an image generator where the user inputs text and images, and the generator merges all of that into a .png file for you to save. So, I thought "mmmh I could do that?" (^^)b

・゚: *✧・゚:* and I did *:・゚✧*:・゚

Now I'll use this for future project ideas! Especially a project similar to those "Code Snippets to Image" generators I use to share code on my blog! I'll make a proper post about how I did it, because it took me forever: I went from an API with limited conversions to an outdated tutorial with broken links 😖

art used @fraberry-stroobcake 🌷


#codeblr#coding#progblr#programming#studyblr#studying#computer science#tech#html css#html5 css3#code#programmer#comp sci#web design


How to Implement Royalty Payments in NFTs Using Smart Contracts

NFTs have revolutionized how creators monetize their digital work, with royalty payments being one of the most powerful features. Let's explore how to implement royalty mechanisms in your NFT smart contracts, ensuring creators continue to benefit from secondary sales - all without needing to write code yourself.

Understanding NFT Royalties

Royalties allow creators to earn a percentage of each secondary sale. Unlike traditional art, where artists rarely benefit from appreciation in their work's value, NFTs can automatically distribute royalties to creators whenever their digital assets change hands.

The beauty of NFT royalties is that once set up, they work automatically. When someone resells your NFT on a marketplace that honors royalties, you receive your percentage without any manual intervention.
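For the curious, here is roughly what the contract side computes. Marketplaces that support the ERC-2981 NFT Royalty Standard can call its `royaltyInfo(tokenId, salePrice)` function, which returns a receiver address and a royalty amount. The minimal Python sketch below only illustrates that arithmetic and is not contract code. It assumes the rate is stored in basis points out of 10,000, which is the default denominator in OpenZeppelin's `ERC2981`. The names `royalty_amount` and `FEE_DENOMINATOR` are hypothetical.

```python
# Minimal sketch of ERC-2981-style royalty arithmetic (not a smart contract).
# Assumption: the rate is in basis points out of 10,000, as in OpenZeppelin's
# ERC2981 default; Python's // floors like Solidity's unsigned integer division.

FEE_DENOMINATOR = 10_000  # 10,000 basis points = 100%


def royalty_amount(sale_price_wei: int, royalty_bps: int) -> int:
    """Return the royalty owed on a sale, in the same unit as the price (wei)."""
    if not 0 <= royalty_bps <= FEE_DENOMINATOR:
        raise ValueError("royalty must be between 0 and 10,000 basis points")
    if sale_price_wei < 0:
        raise ValueError("sale price cannot be negative")
    # Multiply before dividing so small rates are not truncated to zero early.
    return sale_price_wei * royalty_bps // FEE_DENOMINATOR


if __name__ == "__main__":
    one_eth = 10**18  # 1 ETH expressed in wei
    # A 5% royalty (500 bps) on a 1 ETH resale
    print(royalty_amount(one_eth, 500))  # 50000000000000000 (0.05 ETH)
```

ERC-2981 only reports what is owed. The marketplace still has to choose to pay it, which is why the enforcement concerns discussed later matter.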

No-Code Solutions for Implementing NFT Royalties

1. Choose a Creator-Friendly NFT Platform

Several platforms now offer user-friendly interfaces for creating NFTs with royalty settings:

OpenSea: Allows setting royalties up to 10% through their simple creator dashboard

Rarible: Offers customizable royalty settings without coding

Foundation: Automatically includes a 10% royalty for creators

Mintable: Provides easy royalty configuration during the minting process

NFTPort: Offers API-based solutions with simpler implementation requirements

2. Setting Up Royalties Through Platform Interfaces

Most platforms follow a similar process:

Create an account and verify your identity

Navigate to the creation/minting section

Upload your digital asset

Fill in the metadata (title, description, etc.)

Look for a "Royalties" or "Secondary Sales" section

Enter your desired percentage (typically between 2.5% and 10%)

Complete the minting process

3. Understanding Platform-Specific Settings

Different platforms have unique approaches to royalty implementation:

OpenSea

Navigate to your collection settings

Look for "Creator Earnings"

Set your percentage and add recipient addresses

Save your settings

Rarible

During the minting process, you'll see a "Royalties" field

Enter your percentage (up to 50%, though 5-10% is standard)

You can add multiple recipients with different percentages

Foundation

Has a fixed 10% royalty that cannot be modified

Automatically sends royalties to the original creator's wallet

4. Use NFT Creator Tools

Several tools help creators implement royalties without coding:

NFT Creator Pro: Offers drag-and-drop functionality with royalty settings

Manifold Studio: Provides customizable contracts without coding knowledge

Mintplex: Allows creators to establish royalties through simple forms

Bueno: Features a no-code NFT builder with royalty options

Important Considerations for Your Royalty Strategy

Marketplace Compatibility

Not all marketplaces honor royalty settings equally. Research which platforms respect creator royalties before deciding where to list your NFTs.

Reasonable Royalty Percentages

While you might be tempted to set high royalty percentages, market standards typically range from 5% to 10%. Setting royalties too high might discourage secondary sales altogether.

Payment Recipient Planning

Consider whether royalties should go to:

Your personal wallet

A business entity

Multiple creators (split royalties)

A community treasury or charity
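Splitting a royalty among several recipients needs a rule for the leftover units that integer division produces. The Python sketch below is hypothetical and does not describe how any particular platform works. Shares are in basis points and must total 10,000. Any remainder goes to the first recipient, so the payouts always add up to the full royalty. The recipient labels stand in for wallet addresses.

```python
from typing import Dict


def split_royalty(total: int, shares_bps: Dict[str, int]) -> Dict[str, int]:
    """Split `total` (e.g. wei) among recipients by basis-point share.

    Hypothetical sketch: shares must sum to 10,000; rounding leftovers
    ("dust") are given to the first recipient so nothing is lost.
    """
    if not shares_bps:
        raise ValueError("at least one recipient is required")
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must add up to 10,000 basis points")
    payouts = {addr: total * bps // 10_000 for addr, bps in shares_bps.items()}
    dust = total - sum(payouts.values())
    first = next(iter(payouts))  # dicts keep insertion order
    payouts[first] += dust
    return payouts


if __name__ == "__main__":
    # e.g. 70% to the artist, 20% to a collaborator, 10% to a community treasury
    shares = {"artist": 7_000, "collaborator": 2_000, "treasury": 1_000}
    print(split_royalty(1_000, shares))
    # {'artist': 700, 'collaborator': 200, 'treasury': 100}
```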

Transparency with Collectors

Clearly communicate your royalty structure to potential buyers. Transparency builds trust in your project and helps buyers understand the long-term value proposition.

Navigating Royalty Enforcement Challenges

While the NFT industry initially embraced creator royalties, some marketplaces have made them optional. To maximize your royalty enforcement:

Choose supportive marketplaces: List primarily on platforms that enforce royalties

Engage with your community: Cultivate collectors who value supporting creators

Utilize blocklisting tools: Some solutions allow creators to block sales on platforms that don't honor royalties

Consider subscription models: Offer special benefits to collectors who purchase through royalty-honoring platforms

Tracking Your Royalty Payments

Without coding knowledge, you can still track your royalty income:

NFT Analytics platforms: Services like NFTScan and Moonstream provide royalty tracking

Wallet notification services: Set up alerts for incoming payments

Marketplace dashboards: Most platforms offer creator dashboards with earning statistics

Third-party accounting tools: Solutions like NFTax help track royalty income for tax purposes

Real-World Success Stories

Many successful NFT creators have implemented royalties without coding knowledge:

Digital artist Beeple receives royalties from secondary sales of his record-breaking NFT works

Photographer Isaac "Drift" Wright funds new creative projects through ongoing royalties

Music groups like Kings of Leon use NFT royalties to create sustainable revenue streams

Conclusion

Implementing royalty payments in NFTs doesn't require deep technical knowledge. By leveraging user-friendly platforms and tools, any creator can ensure they benefit from the appreciation of their digital assets over time.

As the NFT ecosystem evolves, staying informed about royalty standards and marketplace policies will help you maximize your passive income potential. With the right approach, you can create a sustainable revenue stream that rewards your creativity for years to come.

Remember that while no-code solutions make implementation easier, understanding the underlying principles of NFT royalties will help you make more strategic decisions for your creative business.

#game#mobile game development#multiplayer games#metaverse#blockchain#vr games#unity game development#nft#gaming


Vibecoding a production app

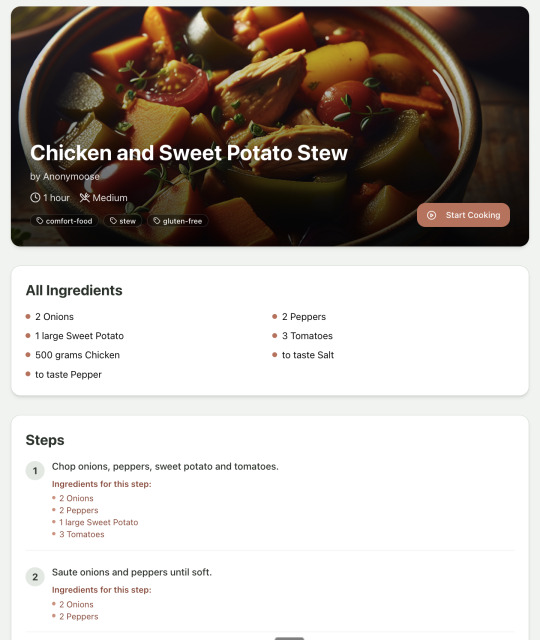

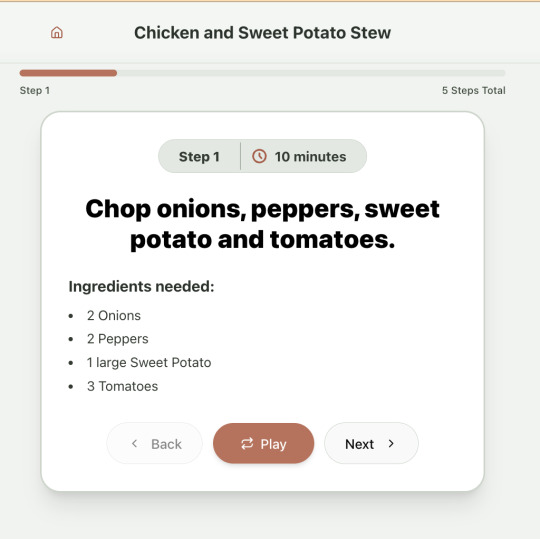

TL;DR I built and launched a recipe app with about 20 hours of work - recipeninja.ai

Background: I'm a startup founder turned investor. I taught myself (bad) PHP in 2000, and picked up Ruby on Rails in 2011. I'd guess 2015 was the last time I wrote a line of Ruby professionally. I've built small side projects over the years, but nothing with any significant usage. So it's fair to say I'm a little rusty, and I never really bothered to learn front end code or design.

In my day job at Y Combinator, I'm around founders who are building amazing stuff with AI every day and I kept hearing about the advances in tools like Lovable, Cursor and Windsurf. I love building stuff and I've always got a list of little apps I want to build if I had more free time.

About a month ago, I started playing with Lovable to build a word game based on Articulate (it's similar to Heads Up or Taboo). I got a working version, but I quickly ran into limitations - I found it very complicated to add a Supabase backend, and it kept re-writing large parts of my app logic when I only wanted to make cosmetic changes. It felt like a toy - not ready to build real applications yet.

But I kept hearing great things about tools like Windsurf. A couple of weeks ago, I looked again at my list of app ideas to build and saw "Recipe App". I've wanted to build a hands-free recipe app for years. I love to cook, but the problem with most recipe websites is that they're optimized for SEO, not for humans. So you have pages and pages of descriptive crap to scroll through before you actually get to the recipe. I've used the recipe app Paprika to store my recipes in one place, but honestly it feels like it was built in 2009. The UI isn't great for actually cooking. My hands are covered in food and I don't really want to touch my phone or computer when I'm following a recipe.

So I set out to build what would become RecipeNinja.ai.

For this project, I decided to use Windsurf. I wanted a Rails 8 API backend and React front-end app and Windsurf set this up for me in no time. Setting up homebrew on a new laptop, installing npm and making sure I'm on the right version of Ruby is always a pain. Windsurf did this for me step-by-step. I needed to set up SSH keys so I could push to GitHub and Heroku. Windsurf did this for me as well, in about 20% of the time it would have taken me to Google all of the relevant commands.

I was impressed that it started using the Rails conventions straight out of the box. For database migrations, it used the Rails command-line tool, which then generated the correct file names and used all the correct Rails conventions. I didn't prompt this specifically - it just knew how to do it. It one-shotted pretty complex changes across the React front end and Rails backend to work seamlessly together.

To start with, the main piece of functionality was to generate a complete step-by-step recipe from a simple input ("Lasagne"), generate an image of the finished dish, and then allow the user to progress through the recipe step-by-step with voice narration of each step. I used OpenAI for the LLM and ElevenLabs for voice. "Grandpa Spuds Oxley" gave it a friendly southern accent.

Recipe summary:

And the recipe step-by-step view:

I was pretty astonished that Windsurf managed to integrate both the OpenAI and ElevenLabs APIs without me doing very much at all. After we had a couple of problems with the OpenAI Ruby library, it quickly fell back to a raw Ruby HTTP client implementation, but I honestly didn't care. As long as it worked, I didn't really mind if it used 20 lines of code or two lines of code. And Windsurf was pretty good about enforcing reasonable security practices. I wanted to call ElevenLabs directly from the front end while I was still prototyping stuff, and Windsurf objected very strongly, telling me that I was risking exposing my private API credentials to the Internet. I promised I'd fix it before I deployed to production and it finally acquiesced.

I decided I wanted to add "Advanced Import" functionality where you could take a picture of a recipe (this could be a handwritten note or a picture from a favourite recipe book) and RecipeNinja would import the recipe. This took a handful of minutes.

Pretty quickly, a pattern emerged; I would prompt for a feature. It would read relevant files and make changes for two or three minutes, and then I would test the backend and front end together. I could quickly see from the JavaScript console or the Rails logs if there was an error, and I would just copy paste this error straight back into Windsurf with little or no explanation. 80% of the time, Windsurf would correct the mistake and the site would work. Pretty quickly, I didn't even look at the code it generated at all. I just accepted all changes and then checked if it worked in the front end.

After a couple of hours of work on the recipe generation, I decided to add the concept of "Users" and include Google Auth as a login option. This would require extensive changes across the front end and backend - a database migration, a new model, new controller and entirely new UI. Windsurf one-shotted the code. It didn't actually work straight away because I had to configure Google Auth to add `localhost` as a valid origin domain, but Windsurf talked me through the changes I needed to make on the Google Auth website. I took a screenshot of the Google Auth config page and pasted it back into Windsurf and it caught an error I had made. I could log in to my app immediately after I made this config change. Pretty mindblowing. You can now see who's created each recipe, keep a list of your own recipes, and toggle each recipe to public or private visibility.

When I needed to set up Heroku to host my app online, Windsurf generated a bunch of terminal commands to configure my Heroku apps correctly. It went slightly off track at one point because it was using old Heroku APIs, so I pointed it to the Heroku docs page and it fixed it up correctly.

I always dreaded adding custom domains to my projects - I hate dealing with registrars and configuring DNS to point at the right nameservers. But Windsurf told me how to configure my GoDaddy domain name DNS to work with Heroku, telling me exactly what buttons to press and what values to paste into the DNS config page. I pointed it at the Heroku docs again and Windsurf used the Heroku command line tool to add the "Custom Domain" add-ons I needed and fetch the right Heroku nameservers. I took a screenshot of the GoDaddy DNS settings and it confirmed it was right.

I can see very soon that tools like Cursor & Windsurf will integrate something like Browser Use so that an AI agent will do all this browser-based configuration work with zero user input.

I'm also impressed that Windsurf will sometimes start up a Rails server and use curl commands to check that an API is working correctly, or start my React project and load up a web preview and check the front end works. This functionality didn't always seem to work consistently, and so I fell back to testing it manually myself most of the time.

When I was happy with the code, it wrote git commits for me and pushed code to Heroku from the in-built command line terminal. Pretty cool!

I do have a few niggles still. Sometimes it's a little over-eager - it will make more changes than I want, without checking with me that I'm happy or the code works. For example, it might try to commit code and deploy to production, and I need to press "Stop" and actually test the app myself. When I asked it to add analytics, it went overboard and added 100 different analytics events in pretty insignificant places. When it got trigger-happy like this, I reverted the changes and gave it more precise commands to follow one by one.

The one thing I haven't got working yet is automated testing that's executed by the agent before it decides a task is complete; there's probably a way to do it with custom rules (I have spent zero time investigating this). It feels like I should be able to have an integration test suite that is run automatically after every code change, and then any test failures should be rectified automatically by the AI before it says it's finished.

Also, the AI should be able to tail my Rails logs to look for errors. It should spot things like inefficient database queries and automatically optimize my Active Record queries to make my app perform better. At the moment I'm copy-pasting excerpts of the Rails logs, and then Windsurf quickly figures out that I've got an N+1 query problem and fixes it. Pretty cool.
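For readers who haven't met the term: an N+1 query problem is when code runs one query to load a list, then one extra query per item to load something related. The sketch below is not Rails code; it's a minimal illustration using Python's built-in `sqlite3` module and a hypothetical `users`/`recipes` schema, counting the statements each approach sends to the database. In Rails the usual fix is eager loading (for example `includes`), which batches the related lookups; here a single JOIN plays that role.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    """Tiny in-memory database: hypothetical users who own recipes."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE recipes (id INTEGER PRIMARY KEY, title TEXT, user_id INTEGER);
        INSERT INTO users VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
        INSERT INTO recipes VALUES (1, 'Pad Thai', 1), (2, 'Tacos', 2),
                                   (3, 'Pizza', 3), (4, 'Curry', 1);
        """
    )
    return conn

def count_queries(conn: sqlite3.Connection, fn):
    """Run fn(conn) and return (result, number of SQL statements executed)."""
    statements = []
    conn.set_trace_callback(statements.append)
    try:
        result = fn(conn)
    finally:
        conn.set_trace_callback(None)
    return result, len(statements)

def authors_n_plus_one(conn):
    # 1 query for the recipe list, then 1 more query per recipe for its author.
    rows = conn.execute("SELECT title, user_id FROM recipes ORDER BY id").fetchall()
    out = []
    for title, user_id in rows:
        (name,) = conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
        out.append((title, name))
    return out

def authors_batched(conn):
    # One JOIN fetches every recipe together with its author's name.
    return conn.execute(
        "SELECT r.title, u.name FROM recipes r "
        "JOIN users u ON u.id = r.user_id ORDER BY r.id"
    ).fetchall()
```

With four recipes, the first version issues five queries and the second issues one; the gap grows linearly with the number of rows, which is why it shows up so clearly in the logs.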

Refactoring is also kind of painful. I've ended up with several files that are 700-900 lines long and contain duplicate functionality. For example, list recipes by tag and list recipes by user are basically the same.

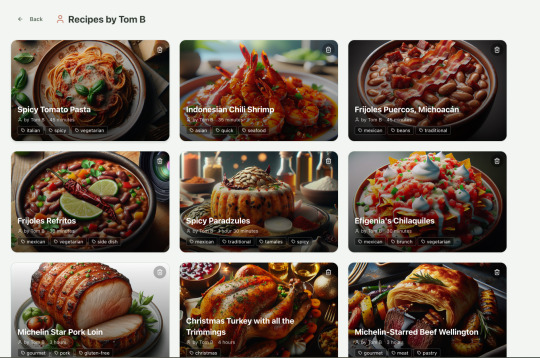

Recipes by user:

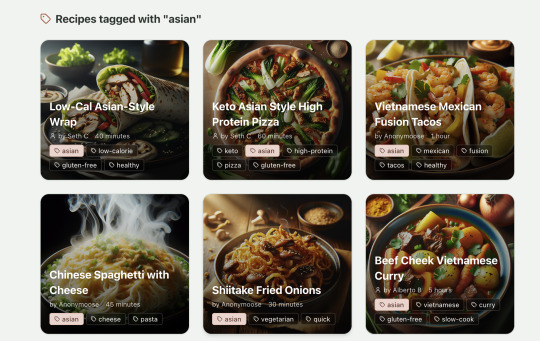

This should really be identical to list recipes by tag, but Windsurf has implemented them separately.

Recipes by tag:

If I ask Windsurf to refactor these two pages, it randomly changes stuff like renaming analytics events, rewriting user-facing alerts, and tweaking random little UX details, when I really want to keep the functionality exactly the same and only move duplicate code into shared modules. Instead, to refactor successfully, I had to ask Windsurf to list out ideas for refactoring, then prompt it specifically to refactor these things one by one, touching nothing else. That worked a little better, but it still wasn't perfect.

Sometimes adding minor functionality to the Rails API changes the entire API response, rather than just adding a couple of fields. For example, it will occasionally change the recipes index endpoint to nest the response in an object ({ "recipes": [ ] }) instead of returning a bare array, which breaks the frontend. And then another minor change will revert it. This is where adding tests to identify and prevent these kinds of API changes would be really useful. When I ask Windsurf to fix these API changes, it instead changes the front end to accept the new JSON format and also leaves the old implementation in for "backwards compatibility". This ends up as a tangled mess of code that isn't really necessary. But I'm vibecoding, so I didn't bother to fix it.
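As a sketch of the kind of test that would catch this, here is a minimal response-shape check in Python, assuming the index endpoint is supposed to return a bare JSON array of recipe objects. The required field names are hypothetical placeholders; in a real suite the body would come from a request against the running API rather than a string literal.

```python
import json
from typing import Any, List

REQUIRED_FIELDS = {"id", "title"}  # hypothetical; match the real serializer

def check_recipe_index_shape(body: str) -> List[Any]:
    """Raise if the recipes index stops being a bare JSON array of recipe objects."""
    data = json.loads(body)
    if not isinstance(data, list):
        # Catches the { "recipes": [ ... ] } regression described above.
        raise AssertionError(f"expected a JSON array, got {type(data).__name__}")
    for item in data:
        if not isinstance(item, dict):
            raise AssertionError(f"expected recipe objects, got {type(item).__name__}")
        missing = REQUIRED_FIELDS - set(item)
        if missing:
            raise AssertionError(f"recipe is missing fields: {sorted(missing)}")
    return data
```

Raising explicitly (rather than using bare `assert`) keeps the check active even if Python runs with optimizations that strip assertions.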

Then there were some changes that just didn't work at all. Trying to implement PostHog analytics in the front end seemed to break my entire app multiple times. I tried to add user voice commands ("Go to the next step"), but this conflicted with the ElevenLabs voice recordings. Having really good git discipline makes vibe coding much easier and less stressful. If something doesn't work after 10 minutes, I can just run git reset --hard HEAD. I've not lost very much time, and it frees me up to try more ambitious prompts to see what the AI can do. Less technical users who aren't familiar with git have lost months of work when the AI goes off on a vision quest and the built-in revert functionality doesn't work properly. It seems like adding more native support for version control could be a massive win for these AI coding tools.

Another complaint I've heard is that AI coding tools don't write "production" code that can scale. So I decided to put this to the test by asking Windsurf for some tips on how to make the application more performant. It identified that I was downloading 3 MB image files for each recipe, and suggested a Rails feature for generating lower-resolution image variants automatically. Two minutes later, I had thumbnail and midsize variants that decreased the loading time of each page by 80%. Similarly, it identified inefficient N+1 Active Record queries and rewrote them to be more efficient. Rails has plenty more built-in performance features; caching would probably be the next thing I'd add if usage really ballooned.

Before going to production, I kept my promise to move my ElevenLabs API keys to the backend. Almost as an afterthought, I asked Windsurf to cache the voice responses so that I'd only make an ElevenLabs API call once for each recipe step; after that, the audio file was stored in S3 using Rails Active Storage and served without costing me more credits. Two minutes later, it was done. Awesome.
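The caching idea is simple enough to sketch. This is not the app's Rails code: it's a minimal Python illustration assuming a cache key of (recipe ID, step number, voice ID) and an optional force-regenerate flag. `synthesize` is a hypothetical stand-in for the paid text-to-speech call, and the in-memory dict stands in for S3 storage.

```python
from typing import Callable, Dict, Tuple

CacheKey = Tuple[str, int, str]  # (recipe_id, step_id, voice_id)

class VoiceCache:
    """Generate audio once per (recipe, step, voice) and reuse it afterwards."""

    def __init__(self, synthesize: Callable[[str, str], bytes]):
        self.synthesize = synthesize  # hypothetical paid TTS call
        self.store: Dict[CacheKey, bytes] = {}  # stand-in for S3

    def get_audio(self, recipe_id: str, step_id: int, voice_id: str,
                  text: str, force: bool = False) -> bytes:
        key = (recipe_id, step_id, voice_id)
        # Only pay for synthesis on a cache miss or an explicit regeneration.
        if force or key not in self.store:
            self.store[key] = self.synthesize(text, voice_id)
        return self.store[key]
```

One design caveat: keying only on the step means that if a step's text is edited, the old audio is served until it's regenerated, so including a hash of the text in the key may be worth considering.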

At the end of a vibecoding session, I'd write a list of 10 or 15 new ideas for functionality that I wanted to add the next time I came back to the project. In the past, these lists would've built up over time and never gotten done. Each task might've taken me five minutes to an hour to complete manually. With Windsurf, I was astonished how quickly I could work through these lists. Changes took one or two minutes each, and within 30 minutes I'd completed my entire to-do list from the day before. I felt incredibly productive. I can create features faster than I can come up with ideas.

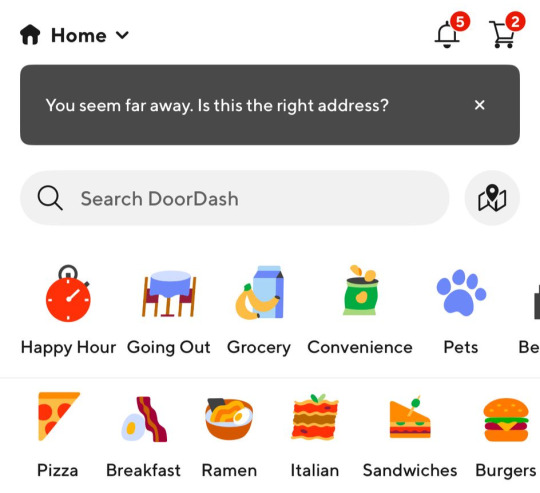

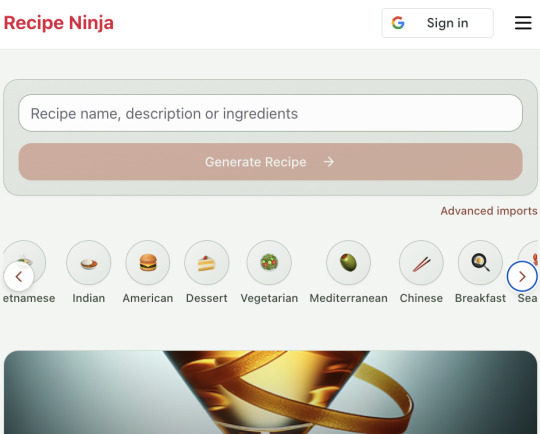

Before launching, I wanted to improve the design, so I took a quick look at a couple of recipe sites. They were much more visual than my site, so I simply told Windsurf to make my design more visual, emphasizing photos of food. Its first try was great. I showed it to a couple of friends and they suggested I should add recipe categories - "Thai" or "Mexican" or "Pizza", for example. They showed me the DoorDash app, so I took a screenshot of it and pasted it into Windsurf. My prompt was "Give me a carousel of food icons that look like this". Again, this worked in one shot. I think my version actually looks better than DoorDash 🤷♂️

DoorDash:

My carousel:

I also noticed a console error from a missing favicon. I'd always struggled to make favicons for previous sites because I could never figure out where they were supposed to go or what file format they needed. I got OpenAI to generate me a little recipe ninja icon with a transparent background and saved it into my project directory. I asked Windsurf what file formats I needed, and it listed out nine different sizes and file formats. That seemed annoying. I wondered if Windsurf could just do it all for me. It quickly wrote a series of Bash commands to create a temporary folder, resize the image, and create the nine variants I needed. It put them into the right directory and then cleaned up the temporary directory. I laughed in amazement. I've never been good at Bash scripting, and I didn't know if it was even possible to do what I was asking via the command line. I guess it is.

After launching and posting on Twitter, a few hundred users visited the site and generated about 1000 recipes. I was pretty happy! Unfortunately, the next day I woke up and saw that I had a $700 OpenAI bill. Someone had been abusing the site and costing me a lot of OpenAI credits by creating a single recipe over and over again - "Pasta with Shallots and Pineapple". They did this 12,000 times. Obviously, I had not put any rate limiting in.

Still, I was determined not to write any code. I explained the problem and asked Windsurf to come up with solutions. Seconds later, I had 15 pretty good suggestions. I implemented several (but not all) of the ideas in about 10 minutes and the abuse stopped dead in its tracks. I won't tell you which ones I chose in case Mr Shallots and Pineapple is reading. The app's security is not perfect, but I'm pretty happy with it for the scale I'm at. If I continue to grow and get more abuse, I'll implement more robust measures.
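The post deliberately doesn't say which measures were chosen, so this is not the author's fix. It's a generic per-client sliding-window rate limiter in Python (the app itself is Rails), shown only to illustrate one common building block; the client key (an IP address or user ID) and the limits are assumptions. The injectable clock makes it testable without sleeping.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict

class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each client key."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client: str) -> bool:
        now = self.clock()
        q = self.hits[client]
        # Drop timestamps that have slid out of the current window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: reject (e.g. respond with HTTP 429)
        q.append(now)
        return True
```

Because the counts live in process memory, each server process keeps its own tally; an app running on several processes or dynos would need a shared store (such as Redis) for the limit to hold globally.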

Overall, I am astonished how productive Windsurf has made me in the last two weeks. I'm not a good designer or frontend developer, and I'm a very rusty Rails dev. I got this project into production 5 to 10 times faster than it would've taken me manually, and the level of polish on the front end is much higher than I could've achieved on my own. Over and over again, I would ask for a change and be astonished at the speed and quality with which Windsurf implemented it. I just sat laughing as the computer wrote code.

The next thing I want to change is making the recipe generation process much more immediate and responsive. Right now, it takes about 20 seconds to generate a recipe and for a new user it feels like maybe the app just isn't doing anything.

Instead, I'm experimenting with using WebSockets to show a streaming response as the recipe is created. This gives the user immediate feedback that something is happening. It would also make editing the recipe really fun - you could ask it to "add nuts" to the recipe, and see the recipe dynamically update 2-3 seconds later. You could also say "Increase the quantities to cook for 8 people" or "Change from imperial to metric measurements".

I have a basic implementation working, but there are still some rough edges. I might actually go and read the code this time to figure out what it's doing!

I also want to add a full voice agent interface so that you don't have to touch the screen at all. Halfway through cooking a recipe, you might ask "I don't have cilantro - what could I use instead?" or say "Set a timer for 30 minutes". That would be my dream recipe app!

Tools like Windsurf or Cursor aren't yet as useful for non-technical users - they're extremely powerful and there are still too many ways to blow your own face off. I have a fairly good idea of the architecture that I want Windsurf to implement, and I could quickly spot when it was going off track or choosing a solution that was inappropriately complicated for the feature I was building. At the moment, a technical background is a massive advantage for using Windsurf. As a rusty developer, it made me feel like I had superpowers.

But I believe within a couple of months, when things like log tailing and automated testing and native version control get implemented, it will be an extremely powerful tool for even non-technical people to write production-quality apps. The AI will be able to make complex changes and then verify those changes are actually working. At the moment, it feels like it's making a best guess at what will work and then leaving the user to test it. Implementing better feedback loops will enable a truly agentic, recursive, self-healing development flow. It doesn't feel like it needs any breakthrough in technology to enable this. It's just about adding a few tool calls to the existing LLMs. My mind races as I try to think through the implications for professional software developers.

Meanwhile, the LLMs aren't going to sit still. They're getting better at a frightening rate. In the last week, I spoke to several very capable software engineers who are Y Combinator founders. About a quarter of them told me that 95% of their code is written by AI. In six or twelve months, I just don't think software engineering is going to exist in the same way as it does today. The cost of creating high-quality, custom software is quickly trending towards zero.

You can try the site yourself at recipeninja.ai

Here's a complete list of functionality. Of course, Windsurf just generated this list for me 🫠

RecipeNinja: Comprehensive Functionality Overview

Core Concept: the app appears to be a cooking assistant application that provides voice-guided recipe instructions, allowing users to cook hands-free while following step-by-step recipe guidance.

Backend (Rails API) Functionality

User Authentication & Authorization

Google OAuth integration for user authentication

User account management with secure authentication flows

Authorization system ensuring users can only access their own private recipes or public recipes

Recipe Management

Recipe Model Features:

Unique public IDs (format: "r_" + 14 random alphanumeric characters) for security

User ownership (user_id field with NOT NULL constraint)

Public/private visibility toggle (default: private)

Comprehensive recipe data storage (title, ingredients, steps, cooking time, etc.)

Image attachment capability using Active Storage with S3 storage in production

Recipe Tagging System:

Many-to-many relationship between recipes and tags

Tag model with unique name attribute

RecipeTag join model for the relationship

Helper methods for adding/removing tags from recipes

Recipe API Endpoints:

CRUD operations for recipes

Pagination support with metadata (current_page, per_page, total_pages, total_count)

Default sorting by newest first (created_at DESC)

Filtering recipes by tags

Different serializers for list view (RecipeSummarySerializer) and detail view (RecipeSerializer)

Voice Generation

Voice Recording System:

VoiceRecording model linked to recipes

Integration with the ElevenLabs API for text-to-speech conversion

Caching of voice recordings in S3 to reduce API calls

Unique identifiers combining recipe_id, step_id, and voice_id

Force regeneration option for refreshing recordings

Audio Processing:

Using streamio-ffmpeg gem for audio file analysis

Active Storage integration for audio file management

S3 storage for audio files in production

Recipe Import & Generation

RecipeImporter Service:

OpenAI integration for recipe generation

Conversion of text recipes into structured format

Parsing and normalization of recipe data

Import from photos functionality

Frontend (React) Functionality

User Interface Components

Recipe Selection & Browsing:

Recipe listing with pagination

Real-time updates with 10-second polling mechanism

Tag filtering functionality

Recipe cards showing summary information (without images)

"View Details" and "Start Cooking" buttons for each recipe

Recipe Detail View:

Complete recipe information display

Recipe image display

Tag display with clickable tags

Option to start cooking from this view

Cooking Experience:

Step-by-step recipe navigation

Voice guidance for each step

Keyboard shortcuts for hands-free control:

Arrow keys for step navigation

Space for play/pause audio

Escape to return to recipe selection

URL-based step tracking (e.g., /recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1)

State Management & Data Flow

Recipe Service:

API integration for fetching recipes

Support for pagination parameters

Tag-based filtering

Caching mechanisms for recipe data

Image URL handling for detailed views

Authentication Flow:

Google OAuth integration using environment variables

User session management

Authorization header management for API requests

Progressive Web App Features

PWA capabilities for installation on devices

Responsive design for various screen sizes

Favicon and app icon support

Deployment Architecture

Two-App Structure:

cook-voice-api: Rails backend on Heroku

cook-voice-wizard: React frontend/PWA on Heroku

Backend Infrastructure:

Ruby 3.2.2

PostgreSQL database (Heroku PostgreSQL addon)

Amazon S3 for file storage

Environment variables for configuration

Frontend Infrastructure:

React application

Environment variable configuration

Static buildpack on Heroku

SPA routing configuration

Security Measures:

HTTPS enforcement

Rails credentials system

Environment variables for sensitive information

Public ID system to mask database IDs

This comprehensive overview covers the major functionality of the Cook Voice application based on the available information. The application appears to be a sophisticated cooking assistant that combines recipe management with voice guidance to create a hands-free cooking experience.
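The overview lists two backend details without showing how they work: public IDs of the form "r_" plus 14 random alphanumeric characters, and pagination metadata with `current_page`, `per_page`, `total_pages` and `total_count`. This is not the app's Rails code; it's a minimal Python sketch of one way to produce both, assuming a cryptographically secure random source for the IDs and ceiling division for the page count (with `per_page` greater than zero).

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits  # 62 alphanumeric characters

def new_public_id(prefix: str = "r_", length: int = 14) -> str:
    """Random, non-sequential ID in the 'r_' + 14 alphanumerics format."""
    return prefix + "".join(secrets.choice(ALPHABET) for _ in range(length))

def pagination_meta(total_count: int, page: int, per_page: int) -> dict:
    """Metadata fields named in the overview; assumes per_page > 0."""
    total_pages = (total_count + per_page - 1) // per_page  # ceiling division
    return {
        "current_page": page,
        "per_page": per_page,
        "total_pages": total_pages,
        "total_count": total_count,
    }
```

For example, 45 recipes at 20 per page gives 3 pages. Fourteen characters from a 62-symbol alphabet is roughly 83 bits of randomness, which is why IDs like `r_xlxG4bcTLs9jbM` can't be guessed by counting upward the way sequential database IDs can.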

Text

youtube

“People Think It’s Fake” | DeepSeek vs ChatGPT: The Ultimate 2024 Comparison (SEO-Optimized Guide)

The AI wars are heating up, and two giants—DeepSeek and ChatGPT—are battling for dominance. But why do so many users call DeepSeek "fake" while praising ChatGPT? Is it a myth, or is there truth to the claims? In this deep dive, we’ll uncover the facts, debunk myths, and reveal which AI truly reigns supreme. Plus, learn pro SEO tips to help this article outrank competitors on Google!

Chapters

00:00 Introduction - DeepSeek: China’s New AI Innovation

00:15 What is DeepSeek?

00:30 DeepSeek’s Impressive Statistics

00:50 Comparison: DeepSeek vs GPT-4

01:10 Technology Behind DeepSeek

01:30 Impact on AI, Finance, and Trading

01:50 DeepSeek’s Effect on Bitcoin & Trading

02:10 Future of AI with DeepSeek

02:25 Conclusion - The Future is Here!

Why Do People Call DeepSeek "Fake"? (The Truth Revealed)

The Language Barrier Myth

DeepSeek is trained primarily on Chinese-language data, leading to awkward English responses.

Example: A user asked, "Write a poem about New York," and DeepSeek referenced skyscrapers as "giant bamboo shoots."

SEO Keyword: "DeepSeek English accuracy."

Cultural Misunderstandings

DeepSeek’s humor, idioms, and examples cater to Chinese audiences. Global users find this confusing.

ChatGPT, trained on Western data, feels more "relatable" to English speakers.

Lack of Transparency

Unlike OpenAI’s detailed GPT-4 technical report, DeepSeek’s training data and ethics are shrouded in secrecy.

LSI Keyword: "DeepSeek data sources."

Viral "Fail" Videos

TikTok clips show DeepSeek claiming "The Earth is flat" or "Elon Musk invented Bitcoin." Most are outdated or edited—ChatGPT made similar errors in 2022!

DeepSeek vs ChatGPT: The Ultimate 2024 Comparison

1. Language & Creativity

ChatGPT: Wins for English content (blogs, scripts, code).

Strengths: Natural flow, humor, and cultural nuance.

Weakness: Overly cautious (e.g., refuses to write about "controversial" topics).

DeepSeek: Best for Chinese markets (e.g., Baidu SEO, WeChat posts).

Strengths: Slang, idioms, and local trends.

Weakness: Struggles with Western metaphors.

SEO Tip: Use keywords like "Best AI for Chinese content" or "DeepSeek Baidu SEO."

2. Technical Abilities

Coding:

ChatGPT: Solves Python/JavaScript errors, writes clean code.

DeepSeek: Better at Alibaba Cloud APIs and Chinese frameworks.

Data Analysis:

Both handle spreadsheets, but DeepSeek integrates with Tencent Docs.

3. Pricing & Accessibility

| Feature | DeepSeek | ChatGPT |
|---|---|---|
| Free Tier | Unlimited basic queries | GPT-3.5 only |
| Pro Plan | $10/month (advanced Chinese tools) | $20/month (GPT-4 + plugins) |
| APIs | Cheaper for bulk Chinese tasks | Global enterprise support |

SEO Keyword: "DeepSeek pricing 2024."

Debunking the "Fake AI" Myth: 3 Case Studies

Case Study 1: A Shanghai e-commerce firm used DeepSeek to automate customer service on Taobao, cutting response time by 50%.

Case Study 2: A U.S. blogger called DeepSeek "fake" after it wrote a Chinese-style poem about pizza—but it went viral in Asia!

Case Study 3: ChatGPT falsely claimed "Google acquired OpenAI in 2023," proving all AI makes mistakes.

How to Choose: DeepSeek or ChatGPT?

Pick ChatGPT if:

You need English content, coding help, or global trends.

You value brand recognition and transparency.

Pick DeepSeek if:

You target Chinese audiences or need cost-effective APIs.

You work with platforms like WeChat, Douyin, or Alibaba.

LSI Keyword: "DeepSeek for Chinese marketing."

SEO-Optimized FAQs (Voice Search Ready!)

"Is DeepSeek a scam?" No! It’s a legitimate AI optimized for Chinese-language tasks.

"Can DeepSeek replace ChatGPT?" For Chinese users, yes. For global content, stick with ChatGPT.

"Why does DeepSeek give weird answers?" Cultural gaps and training focus. Use it for specific niches, not general queries.

"Is DeepSeek safe to use?" Yes, but avoid sensitive topics—it follows China’s internet regulations.

Pro Tips to Boost Your Google Ranking

Sprinkle Keywords Naturally: Use "DeepSeek vs ChatGPT" 4–6 times.

Internal Linking: Link to related posts (e.g., "How to Use ChatGPT for SEO").

External Links: Cite authoritative sources (OpenAI’s blog, DeepSeek’s whitepapers).

Mobile Optimization: 60% of users read via phone—use short paragraphs.

Engagement Hooks: Ask readers to comment (e.g., "Which AI do you trust?").

Final Verdict: Why DeepSeek Isn’t Fake (But ChatGPT Isn’t Perfect)

The "fake" label stems from cultural bias and misinformation. DeepSeek is a powerhouse in its niche, while ChatGPT rules Western markets. For SEO success:

Target long-tail keywords like "Is DeepSeek good for Chinese SEO?"

Use schema markup for FAQs and comparisons.

Update content quarterly to stay ahead of AI updates.

🚀 Ready to Dominate Google? Share this article, leave a comment, and watch it climb to #1!

Follow for more AI vs AI battles—because in 2024, knowledge is power! 🔍

#ChatGPT alternatives#ChatGPT features#ChatGPT vs DeepSeek#DeepSeek AI review#DeepSeek vs OpenAI#Generative AI tools#chatbot performance#deepseek ai#future of nlp#deepseek vs chatgpt#deepseek#chatgpt#deepseek r1 vs chatgpt#chatgpt deepseek#deepseek r1#deepseek v3#deepseek china#deepseek r1 ai#deepseek ai model#china deepseek ai#deepseek vs o1#deepseek stock#deepseek r1 live#deepseek vs chatgpt hindi#what is deepseek#deepseek v2#deepseek kya hai#Youtube

Text

sometimes I really wish C# had C/C++ style preprocessor macros. I know they have their issues, especially when it comes to accidental code obfuscation, but sometimes being able to use semantic similarities between two otherwise entirely different objects can be really handy.

like the API I'm working with has a bunch of event classes that all use paired Subscribe and Unsubscribe methods, and I'd like it so that every time I Subscribe to an event, that same event also gets added to a list to make sure it gets Unsubscribed later, but since most event calls use different types of arguments, the method calls are functionally too different for a simple generic solution.

If it were C/C++, I'd just be able to do #define SUBEVENT(ev, fun) ev.Subscribe(fun); unsubscribe.Add(() => { ev.Unsubscribe(fun); }); and call it a day, but since C# doesn't have the C preprocessor, it depends on me remembering to manually add each subscribed event to the unsubscribe list.

Feels like there's got to be a better way, but hells if I know what it'd be.
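One common shape for this, sketched in Python rather than C# so treat it as the idea only: pass the subscribe and unsubscribe operations themselves into a helper along with the handler, and have the helper record a closure that undoes the subscription. The helper never needs to know the handler's argument types, because it only forwards the handler. `Event` is a hypothetical stand-in for the API's event classes. In C# the analogue would be a helper taking the two methods as delegates (for instance `Action<T>` for a handler of type `T`); whether C# infers those delegate types cleanly for every event class is an untested assumption.

```python
from typing import Callable, List

class Event:
    """Hypothetical stand-in for one of the API's event classes."""
    def __init__(self) -> None:
        self.handlers: List[Callable] = []
    def Subscribe(self, fn: Callable) -> None:
        self.handlers.append(fn)
    def Unsubscribe(self, fn: Callable) -> None:
        self.handlers.remove(fn)

class Subscriptions:
    """Pairs every subscribe with its matching unsubscribe so cleanup can't be forgotten."""
    def __init__(self) -> None:
        self._undo: List[Callable[[], None]] = []

    def add(self, subscribe: Callable, unsubscribe: Callable, handler: Callable) -> None:
        subscribe(handler)
        # Capture the exact same handler object for the later unsubscribe.
        self._undo.append(lambda: unsubscribe(handler))

    def dispose(self) -> None:
        # Unsubscribe in reverse order of subscription, then forget everything.
        while self._undo:
            self._undo.pop()()
```

Usage is one call per event, e.g. `subs.add(on_damage.Subscribe, on_damage.Unsubscribe, handle_damage)`, followed by a single `subs.dispose()` at teardown.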

Text

Crypto trading mobile app

Designing a Crypto Trading Mobile App involves a balance of usability, security, and aesthetic appeal, tailored to meet the needs of a fast-paced, data-driven audience. Below is an overview of key components and considerations to craft a seamless and user-centric experience for crypto traders.

Key Elements of a Crypto Trading Mobile App Design

1. Intuitive Onboarding

First Impressions: The onboarding process should be simple, guiding users smoothly from downloading the app to making their first trade.

Account Creation: Offer multiple sign-up options (email, phone number, Google/Apple login) and include KYC (Know Your Customer) verification seamlessly.

Interactive Tutorials: For new traders, provide interactive walkthroughs to explain key features like trading pairs, order placement, and wallet setup.

2. Dashboard & Home Screen

Clean Layout: Display an overview of the user's portfolio, including current balances, market trends, and quick access to popular trading pairs.

Market Overview: Real-time market data should be clearly visible. Include options for users to view coin performance, historical charts, and news snippets.

Customization: Let users customize their dashboard by adding favorite assets or widgets like price alerts, trading volumes, and news feeds.

3. Trading Interface

Simple vs. Advanced Modes: Provide two versions of the trading interface. A simple mode for beginners with basic buy/sell options, and an advanced mode with tools like limit orders, stop losses, and technical indicators.

Charting Tools: Integrate interactive, real-time charts powered by TradingView or similar APIs, allowing users to analyze market movements with tools like candlestick patterns, RSI, and moving averages.

Order Placement: Streamline the process of placing market, limit, and stop orders. Use clear buttons and a concise form layout to minimize errors.

Real-Time Data: Update market prices, balances, and order statuses in real-time. Include a status bar that shows successful or pending trades.

4. Wallet & Portfolio Management

Asset Overview: Provide an easy-to-read portfolio page where users can view all their holdings, including balances, performance (gains/losses), and allocation percentages.

Multi-Currency Support: Display a comprehensive list of supported cryptocurrencies. Enable users to transfer between wallets, send/receive assets, and generate QR codes for transactions.

Transaction History: Offer a detailed transaction history, including dates, amounts, and transaction IDs for transparency and record-keeping.

5. Security Features

Biometric Authentication: Use fingerprint, facial recognition, or PIN codes for secure logins and transaction confirmations.

Two-Factor Authentication (2FA): Strong security protocols like 2FA with Google Authenticator or SMS verification should be mandatory for withdrawals and sensitive actions.

Push Notifications for Security Alerts: Keep users informed about logins from new devices, suspicious activities, or price movements via push notifications.

6. User-Friendly Navigation

Bottom Navigation Bar: Include key sections like Home, Markets, Wallet, Trade, and Settings. The icons should be simple, recognizable, and easily accessible with one hand.

Search Bar: A prominent search feature to quickly locate specific coins, trading pairs, or help topics.

7. Analytics & Insights

Market Trends: Display comprehensive analytics including top gainers, losers, and market sentiment indicators.

Push Alerts for Price Movements: Offer customizable price alert notifications to help users react quickly to market changes.

Educational Content: Include sections with tips on technical analysis, crypto market basics, or new coin listings.

8. Social and Community Features

Live Chat: Provide a feature for users to chat with customer support or engage with other traders in a community setting.

News Feed: Integrate crypto news from trusted sources to keep users updated with the latest market-moving events.

9. Light and Dark Mode

Themes: Offer both light and dark mode to cater to users who trade at different times of day. The dark mode is especially important for night traders to reduce eye strain.

10. Settings and Customization

Personalization Options: Allow users to choose preferred currencies, set trading limits, and configure alerts based on their personal preferences.

Language and Regional Settings: Provide multilingual support and regional settings for global users.

Visual Design Considerations

Modern, Minimalist Design: A clean, minimal UI is essential for avoiding clutter, especially when dealing with complex data like market trends and charts.

Color Scheme: Use a professional color palette with accents for call-to-action buttons. Green and red are typically used for indicating gains and losses, respectively.

Animations & Micro-interactions: Subtle animations can enhance the experience by providing feedback on button presses or transitions between screens. However, keep these minimal to avoid slowing down performance.

Conclusion

Designing a crypto trading mobile app requires focusing on accessibility, performance, and security. By blending these elements with a modern, intuitive interface and robust features, your app can empower users to navigate the fast-paced world of crypto trading with confidence and ease.

#uxbridge#uxuidesign#ui ux development services#ux design services#ux research#ux tools#ui ux agency#ux#uxinspiration#ui ux development company#crypto#blockchain#defi#ethereum#altcoin#fintech

Text

I'm no longer gonna be able to comfortably play my special interest, which fucking sucks. Rant under cut. It's about League of Legends and Riot's anti-cheat software, Vanguard, if you're interested.

Riot Vanguard (vgk) is kernel-level software that scans all of a system's processes to detect cheat engines, which in itself is fine - industry standard - except it boasts a particular effectiveness due to how it's run. Vgk runs on start-up so that it's running before a user has the chance to launch a cheat engine, and it can ID hardware so that if a player is caught cheating, they won't be able to play again on the same device. Unless it is disabled manually, it runs 24/7, whereas other AC software starts and stops in line with the game's execution.

It's more effective than other AC software, but it absolutely bricked some PCs on its initial release, when Valorant dropped in 2020. This was a new game, fresh code, but the anti-cheat borders on a fucking rootkit - a term I'm using liberally, because vgk isn't malware, but it works in the same way on a systemic level. It's equally invasive, and can potentially be equally destructive; one of those is intended, and the other is an unfortunate by-product of invasive software being developed by a video game company.

League of Legends is a 15-year-old game with some pretty tragic code. If vgk caused people to bluescreen after exiting Valorant, then even more people are going to encounter issues with the shitshow that is LoL's code base.

Three weeks ago, an attempt to fix a bug regarding an in-client feature fucked over a far more significant API in several major servers. Every time a particular game mode called "Clash" launches (every other month or so), it bricks the servers. This is currently a running gag in the community: that whenever the client acts up (embarrassingly often for such a well-funded game) Riot must be dropping Clash early. But when you introduce a bloody rootkit into the mix that runs in tandem with spaghetti code and beyond the closing of the game app, this is undoubtedly going to fuck some computers up.

I'm not someone who cheats at games, but I care about my system too much to risk this. Something that relies on the BIOS, that is known to have caused permanent damage to systems while running alongside a much better programmed game, that continuously scans your system while it's active (and always activates upon start-up until disabled) is obscenely risky. With 24/7 invasive software, it can disable drivers regardless of what you're playing and when; the worst cases - plural - I've read about anecdotally were people's cooling systems being disabled erroneously by vgk, causing GPU melting. You bet any antivirus software you have installed is going to scan without pause because of it, which will cause more system-wide performance issues, too.

There's also the (albeit minor) risk of other scripts triggering the uh-oh alarm and leading to unfair account bans, and I've poured almost 7 years into this game. I mod some of my single-player games and write scripts. No thanks.

And while I'm lucky enough to have a decent system, the TPM 2.0 and secure boot requirement for Windows 11 users means that vgk will effectively - while the phrase is crude, I haven't seen a concise alternative - "class-ban" League players. It's similar to the release of OW2, where a unique SIM was required for every account, including existing ones until that got changed after enough backlash - except buying a phone number is far cheaper than buying a laptop or PC. Even with the requirements, the performance issues will tank low-end systems, which would already be at higher risk of hardware fuckery from increased and extended CPU usage. And the game is currently designed to be comfortably playable on low-end rigs, so it will force out a good number of players.

If you play League and intend to continue playing after vgk is made mandatory in Jan/Feb 2024, give it a few months after it goes live before you play. That's enough time for any catastrophic issues to unfold, because if the testing period was anything like it is for game features, it won't be sufficient, and the number of cases of system damage will be worse than it was for Valorant upon release.

This rant does read like I'm trying to dissuade people from playing post-vgk, and I'm not, but I am urging people to be cautious and informed on the legitimate controversies surrounding Vanguard, especially anything hardware related. Familiarise yourself with how the program works and assess whether your system will likely be affected, and how permanent any damage could be to your hardware. Read forums (that aren't moderated by Riot employees where possible) and verify the information you're reading. Including this. It's 3:30am and I'm writing this angrily, so my limited explanations of the software could stand to be more thorough.

I'm hoping that there will be enough of a reduction in League's ~200m monthly player-base to spark a reversal in the decision to implement vgk. Not out of consideration for people who don't want to install a rootkit for a video game, but because Riot would lose money and shit their corporate britches.

Having to say goodbye to my favourite game, a universe I love and one of the more significant outlets for socialisation in my life absolutely sucks, though. The actual season changes looked super cool too, and I was stoked about Ambessa coming to the game. I'll enjoy the game while I can, but yeah, this feels like a bitter breakup lmao.

#enforcing a rootkit to ban a few xerath mains was not on my 2024 bingo card#i think i've encountered two cheaters in my 7 years of playing#rant post#league posting


Do You Want Some Cookies?

Working on project-extrovert has been an interesting challenge. The scope of this project has shrunk a lot since the first idea, and one of the main things I dropped was the use of a database, mostly to avoid the cost of hosting one. So things like authentication need to be fully client-side and/or client-stored. However, this is an application that doesn't rely on JavaScript, so how can I store anything in the client without it? Well, do you want some cookies?

Why Cookies

I had never actually used cookies in any of my projects before, mostly because all of them used JavaScript (and a JS framework), so I could just store everything using the Web Storage API (mainly localStorage). But now everything is server-driven: any JavaScript I add to this project is there to enhance the experience and shouldn't be necessary to use the application. So the only way for the server to store something in the client is cookies.

TL;DR Of How Cookies Work

A cookie is, in a sense, just an HTTP header that the browser/client sends with its requests to the server. The server sends a Set-Cookie header in a response, containing the value and optional "rules" (attributes) for the cookie, and the browser then stores it locally. From then on, every subsequent request to that server carries a Cookie header (as long as the cookie hasn't expired and its rules match the request), from which the server can read the values.
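A minimal sketch of that round trip using only Go's net/http and net/http/httptest packages. The cookie name `theme` and both handlers are hypothetical, and the "browser" is simulated by copying the cookies from the first response into the next request (a real browser also checks expiry, domain and path before sending them back).

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// setTheme is a hypothetical handler: http.SetCookie adds a Set-Cookie header.
func setTheme(w http.ResponseWriter, r *http.Request) {
	http.SetCookie(w, &http.Cookie{Name: "theme", Value: "dark", Path: "/"})
}

// readTheme reads the value back from the Cookie header of a later request.
func readTheme(w http.ResponseWriter, r *http.Request) {
	c, err := r.Cookie("theme")
	if err != nil {
		fmt.Fprint(w, "no theme cookie")
		return
	}
	fmt.Fprint(w, "theme is "+c.Value)
}

// roundTrip plays both sides: the first response sets the cookie and the
// next request sends it back, like a browser would.
func roundTrip() (setCookie, body string) {
	rec := httptest.NewRecorder()
	setTheme(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	setCookie = rec.Header().Get("Set-Cookie")

	req := httptest.NewRequest(http.MethodGet, "/", nil)
	for _, c := range rec.Result().Cookies() {
		req.AddCookie(c) // becomes the Cookie request header
	}
	rec2 := httptest.NewRecorder()
	readTheme(rec2, req)
	return setCookie, rec2.Body.String()
}

func main() {
	setCookie, body := roundTrip()
	fmt.Println("Set-Cookie:", setCookie) // Set-Cookie: theme=dark; Path=/
	fmt.Println(body)                     // theme is dark
}
```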

Pretty much every website uses cookies one way or another: they were one of the first implementations of state/storage on the web, and pretty much every browser supports them. Also, fun note: because they were one of the first ways to know which user is accessing a website, they were also heavily abused by companies to track you across websites. A "third-party cookie" is one set by a domain other than the site you're visiting, such as an advertising network whose scripts or images the page loads; without the proper rules or browser protections, the browser sends that cookie back every time any site loads something from that network. That's how advertising networks can track your profile across the internet without you even knowing or acknowledging it. Nowadays there are some regulations, primarily in Europe with the General Data Protection Regulation (GDPR), which is why you always see the "We use cookies" pop-up on websites you visit. I beg you to actually click "Decline" or "More options" and remove any cookie labeled "Non-essential".

Small Challenges and Workarounds

But returning to the topic, using this simple standard wasn't as easy as I thought. The code itself isn't that difficult, and thankfully Go has an incredible standard library for handling HTTP requests and responses. The most difficult part was working around limitations and some security concerns.

Cookie Limitations

The main limitation I stumbled upon was trying to store structured data in a cookie. JSON is pretty much the standard for storing and transferring structured data on the web, so that was my first go-to. However, as you may know, cookies are picky about characters: cookie names can't use any of these: ( ) < > @ , ; : \ " / [ ] ? = { }, and cookie values can't contain double quotes, commas, semicolons, backslashes or whitespace. And well, when JSON looks {"like":"this"}, you can see that using it as-is is pretty much impossible. Go's http.SetCookie function automatically strips ", ; and \ from the cookie's value, and wraps the value in double quotes if it contains a comma or a space.
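To see that sanitizing in action, here's a small sketch. http.SetCookie serializes the cookie with the Cookie type's String method, so calling String directly shows exactly what would land in the Set-Cookie header; the cookie name `data` is just an example. Go also logs a warning about the dropped bytes.

```go
package main

import (
	"fmt"
	"net/http"
)

// serialize returns what Go would write in the Set-Cookie header for value.
// Invalid bytes (", ; and \) are dropped, and a value that still contains
// a space or a comma is wrapped in double quotes.
func serialize(value string) string {
	c := &http.Cookie{Name: "data", Value: value}
	return c.String()
}

func main() {
	fmt.Println(serialize(`{"like":"this"}`))   // data={like:this}
	fmt.Println(serialize(`{"a":"1","b":"2"}`)) // data="{a:1,b:2}"
}
```

The JSON doesn't survive either way: the quotes are gone, so the value can no longer be parsed back as JSON.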

On my first try, I only noticed the stripping of the " character (and not the other characters), so I needed to find a workaround. After some thinking, I started implementing my own data format: I'm learning Go, and this was an opportunity to understand how Go's JSON parsing, and mostly struct tags, work, and to try implementing something similar.

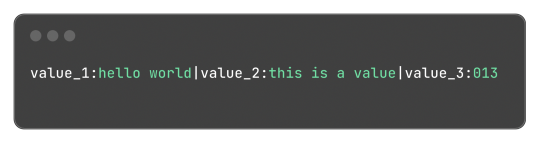

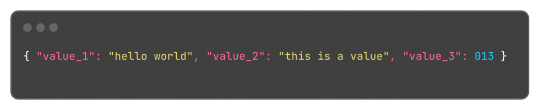

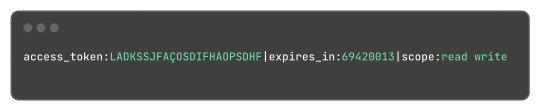

My idea was to make something similar to JSON in one way or another, and I ended up with:

Which, for reference, in JSON would be:

This format is very easy to implement: strings.Split does most of the job of extracting the values, and strings.Join "encodes" them back. Yes, this isn't a "production ready" format or anything like that; it's hacky, just a small fix for small amounts of structured data.
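The actual format from the post isn't reproduced here, so this sketch assumes a hypothetical one: `key:value` pairs joined by `|` (both characters are allowed in cookie values). It only shows the Split/Join idea; there's no escaping, so keys can't contain `:` or `|` and values can't contain `|`.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// encode joins the pairs as "key:value|key:value", sorting keys so the
// output is deterministic (Go map iteration order is random).
func encode(m map[string]string) string {
	keys := make([]string, 0, len(m))
	for k := range m {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	pairs := make([]string, 0, len(keys))
	for _, k := range keys {
		pairs = append(pairs, k+":"+m[k])
	}
	return strings.Join(pairs, "|")
}

// decode splits the string back into a map. strings.Cut splits on the
// first ':' only, so values may contain ':'. Malformed pairs are skipped.
func decode(s string) map[string]string {
	m := make(map[string]string)
	if s == "" {
		return m
	}
	for _, pair := range strings.Split(s, "|") {
		k, v, ok := strings.Cut(pair, ":")
		if !ok {
			continue
		}
		m[k] = v
	}
	return m
}

func main() {
	s := encode(map[string]string{"user": "ana", "token": "abc123"})
	fmt.Println(s)                 // token:abc123|user:ana
	fmt.Println(decode(s)["user"]) // ana
}
```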

Go's Struct Tags

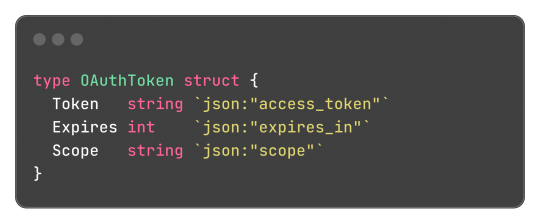

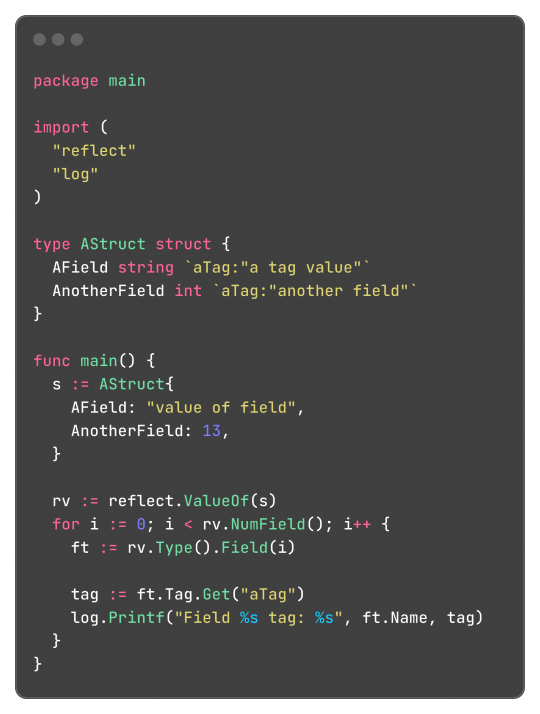

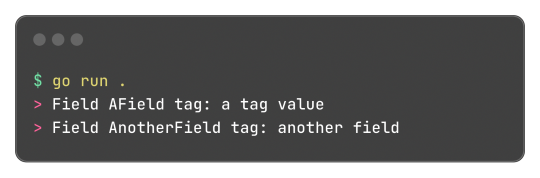

Go has an interesting and, to be honest, very clever feature called struct tags, which are a simple way to add metadata to struct fields. They are plain strings attached to each field and can contain key-value data:

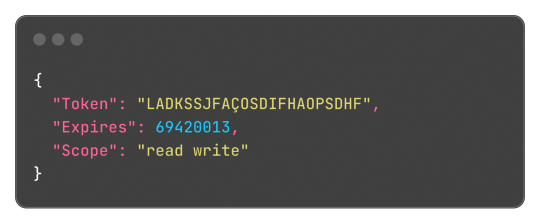

That metadata can be used by packages such as encoding/json to transform the struct into a JSON object with the correct field names:

Without the tags, the output JSON would be:

This works both for encoding and decoding the data, so the package can correctly map the JSON field "access_token" to the struct field "Token".

And well, these tags aren't limited to JSON or some sort of special syntax: any key-value pair can be added and then read with the reflect package, something like this:

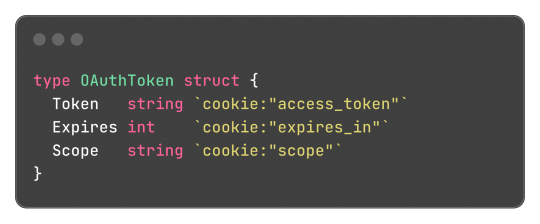

Learning this feature, and the reflect package itself, let me write a very simple encoder and decoder for the format, where:

Can be transformed into:

And that's what I did: the [basic] implementation is just 150 lines of code, not counting the test file I wrote to make sure it worked. It works, and now I can store structured data in cookies.
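This isn't the post's 150-line implementation, just a much smaller sketch of the same idea under stated assumptions: a hypothetical `cookie` tag key, a hypothetical `Session` struct, and the hypothetical `key:value|key:value` layout. It walks the struct with reflect, reads each field's tag with `StructTag.Lookup`, and only handles string fields.

```go
package main

import (
	"fmt"
	"reflect"
	"strings"
)

// Session is hypothetical; the `cookie` tag key is an assumption.
type Session struct {
	Token    string `cookie:"token"`
	Instance string `cookie:"instance"`
	Internal string // no tag: skipped
}

// Marshal joins every tagged string field into "key:value" pairs separated by "|".
func Marshal(v any) string {
	rv := reflect.Indirect(reflect.ValueOf(v))
	if rv.Kind() != reflect.Struct {
		return ""
	}
	var pairs []string
	for i := 0; i < rv.NumField(); i++ {
		key, ok := rv.Type().Field(i).Tag.Lookup("cookie")
		if !ok || rv.Field(i).Kind() != reflect.String {
			continue
		}
		pairs = append(pairs, key+":"+rv.Field(i).String())
	}
	return strings.Join(pairs, "|")
}

// Unmarshal splits s and fills the matching tagged string fields of the
// struct v points to.
func Unmarshal(s string, v any) error {
	rv := reflect.ValueOf(v)
	if rv.Kind() != reflect.Pointer || rv.Elem().Kind() != reflect.Struct {
		return fmt.Errorf("cookie format: want pointer to struct, got %T", v)
	}
	values := make(map[string]string)
	for _, pair := range strings.Split(s, "|") {
		if k, val, ok := strings.Cut(pair, ":"); ok {
			values[k] = val
		}
	}
	elem := rv.Elem()
	for i := 0; i < elem.NumField(); i++ {
		key, ok := elem.Type().Field(i).Tag.Lookup("cookie")
		field := elem.Field(i)
		if !ok || field.Kind() != reflect.String || !field.CanSet() {
			continue
		}
		if val, found := values[key]; found {
			field.SetString(val)
		}
	}
	return nil
}

func main() {
	s := Marshal(Session{Token: "abc123", Instance: "mastodon.social", Internal: "x"})
	fmt.Println(s) // token:abc123|instance:mastodon.social

	var out Session
	if err := Unmarshal(s, &out); err != nil {
		panic(err)
	}
	fmt.Println(out.Token, out.Instance) // abc123 mastodon.social
}
```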

Legacy in Less Than 3 Weeks

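And today, I found out that I can just use url.PathEscape: it escapes every character that's actually forbidden in a cookie value (double quotes, commas, semicolons, backslashes and spaces) and most of the others in that list, like ( ) < > / [ ] ? { }, so its output can be used both in URLs and, surprise, cookie values. (It leaves @, : and = alone, but those are allowed in cookie values anyway.) Not only that, but something like base64.URLEncoding would also work just fine. You live and you learn, y'know; that's what I love about engineering.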
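A sketch of both options, storing a raw JSON string in a cookie and reading it back; the cookie name `data` and the helper names are hypothetical. url.PathUnescape and base64.URLEncoding.DecodeString reverse each encoding.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"net/http"
	"net/url"
)

// escapedCookie percent-encodes the JSON with url.PathEscape, which turns
// the bytes a cookie value can't hold (", comma, ;, \ and spaces) into %XX.
func escapedCookie(jsonValue string) *http.Cookie {
	return &http.Cookie{Name: "data", Value: url.PathEscape(jsonValue)}
}

func readEscaped(c *http.Cookie) (string, error) {
	return url.PathUnescape(c.Value)
}

// base64Cookie is the alternative: URL-safe base64 only produces letters,
// digits, '-', '_' and '=' padding, all valid in cookie values.
func base64Cookie(jsonValue string) *http.Cookie {
	return &http.Cookie{Name: "data", Value: base64.URLEncoding.EncodeToString([]byte(jsonValue))}
}

func readBase64(c *http.Cookie) (string, error) {
	b, err := base64.URLEncoding.DecodeString(c.Value)
	return string(b), err
}

func main() {
	raw := `{"like":"this"}`
	c := escapedCookie(raw)
	fmt.Println(c.String()) // data=%7B%22like%22:%22this%22%7D

	back, err := readEscaped(c)
	fmt.Println(back == raw, err) // true <nil>

	b := base64Cookie(raw)
	back, err = readBase64(b)
	fmt.Println(b.String(), back == raw, err)
}
```

Percent-encoding keeps the value mostly readable in the developer tools, while base64 is more compact for values that are mostly special characters.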

Security Concerns and Refactoring Everything

Another limitation, and mostly a worry for me, was storing access tokens in cookies. By default a cookie isn't that secure: it can be easily accessed by JavaScript and browser extensions. There are ways to block and secure cookies, but even then, you can just open the browser's developer tools and see them. Even though the only way something malicious could happen with these tokens is if the client itself were compromised, which means the user has bigger problems than a leaked social media token, it's better to try to prevent these issues nonetheless (and learn something new, as always).

The encryption and decryption part isn't that difficult, since Go already provides encryption packages in its standard library under crypto. So I implemented encryption that ciphers a string using a key from an environment variable, which I will change every month or so to improve security even more.
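The post doesn't say which cipher it uses, so this is a sketch assuming AES-256-GCM from crypto/aes and crypto/cipher, with a hypothetical `COOKIE_KEY` environment variable holding a base64-encoded 32-byte key. GCM also authenticates the data, so a tampered cookie fails to decrypt instead of yielding garbage.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"encoding/base64"
	"errors"
	"fmt"
	"os"
)

// keyFromEnv reads the hypothetical COOKIE_KEY variable (base64, 32 bytes).
func keyFromEnv() ([]byte, error) {
	key, err := base64.StdEncoding.DecodeString(os.Getenv("COOKIE_KEY"))
	if err != nil {
		return nil, err
	}
	if len(key) != 32 {
		return nil, errors.New("COOKIE_KEY must decode to 32 bytes")
	}
	return key, nil
}

func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// encrypt seals plaintext, prepends the random nonce, and returns URL-safe
// base64 so the result is a valid cookie value.
func encrypt(key []byte, plaintext string) (string, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return "", err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return "", err
	}
	sealed := gcm.Seal(nonce, nonce, []byte(plaintext), nil)
	return base64.URLEncoding.EncodeToString(sealed), nil
}

// decrypt reverses encrypt; it fails on tampered data or a different key.
func decrypt(key []byte, encoded string) (string, error) {
	sealed, err := base64.URLEncoding.DecodeString(encoded)
	if err != nil {
		return "", err
	}
	gcm, err := newGCM(key)
	if err != nil {
		return "", err
	}
	if len(sealed) < gcm.NonceSize() {
		return "", errors.New("ciphertext too short")
	}
	nonce, ciphertext := sealed[:gcm.NonceSize()], sealed[gcm.NonceSize():]
	plain, err := gcm.Open(nil, nonce, ciphertext, nil)
	if err != nil {
		return "", err
	}
	return string(plain), nil
}

func main() {
	key, err := keyFromEnv()
	if err != nil {
		fmt.Println("set COOKIE_KEY to a base64-encoded 32-byte key:", err)
		return
	}
	enc, err := encrypt(key, "my-access-token")
	if err != nil {
		panic(err)
	}
	dec, err := decrypt(key, enc)
	fmt.Println(dec, err)
}
```

One consequence of this design: after a key rotation, cookies encrypted under the old key fail to decrypt, so users would have to sign in again.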

Doing this encryption in every endpoint would be repetitive, so a middleware was the solution. I had already made a small abstraction over Go's default router (the DefaultMuxServer struct), which, to be honest, wasn't the best abstraction, since it deviated a lot from the conventions of Go's standard HTTP package. That deviation would also make it difficult to implement a generic middleware that I could use on any route, or even on any function that handles HTTP requests, so a refactor was needed. Refactoring made me rewrite a lot of code and simplify a lot of the project:

- All routes are now structs that implement the http.Handler interface, so I can use them outside the application router and test them if needed.
- The router ends up being just a helper for keeping all routes in a struct, instead of multiple mux.HandleFunc calls in a function, and it also handles adding middlewares to all routes.
- Middlewares end up being just structs that return a wrapped HandlerFunc, which the router calls with a custom/wrapped implementation of the http.ResponseWriter interface, so middlewares can actually modify the content and headers of the response.

The refactor added 1148 lines and removed 524, and simplified a lot of the code.

The encryption middleware encrypts every cookie value set in the Set-Cookie header and decrypts every incoming cookie. The encrypted result is also encoded to base64, so it can safely be set in the Set-Cookie header after being ciphered.
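Here's a sketch of how such a middleware could work; it isn't the project's code. It wraps http.ResponseWriter so the Set-Cookie headers can be rewritten right before they're sent, reusing net/http's own parser through `http.Response.Cookies`. The cipher is passed in as functions (`CipherFunc` is a hypothetical name), and the toy base64 stand-ins below are encoding, not encryption. It assumes decrypted values are themselves cookie-safe.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// CipherFunc transforms a cookie value (e.g. an AES-GCM encrypt or decrypt).
type CipherFunc func(string) (string, error)

// cookieWriter encrypts Set-Cookie values just before headers are written.
type cookieWriter struct {
	http.ResponseWriter
	encrypt     CipherFunc
	wroteHeader bool
}

func (w *cookieWriter) WriteHeader(code int) {
	if !w.wroteHeader {
		w.wroteHeader = true
		h := w.Header()
		// Parse Set-Cookie lines with net/http's parser via a throwaway Response.
		parsed := (&http.Response{Header: http.Header{"Set-Cookie": h.Values("Set-Cookie")}}).Cookies()
		h.Del("Set-Cookie")
		for _, c := range parsed {
			if enc, err := w.encrypt(c.Value); err == nil {
				c.Value = enc
				h.Add("Set-Cookie", c.String())
			} // cookies that can't be encrypted are dropped
		}
	}
	w.ResponseWriter.WriteHeader(code)
}

func (w *cookieWriter) Write(b []byte) (int, error) {
	if !w.wroteHeader {
		w.WriteHeader(http.StatusOK)
	}
	return w.ResponseWriter.Write(b)
}

// EncryptCookies decrypts incoming cookies and encrypts outgoing ones.
func EncryptCookies(encrypt, decrypt CipherFunc, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		r = r.Clone(r.Context()) // don't mutate the caller's request
		cookies := r.Cookies()
		r.Header.Del("Cookie")
		for _, c := range cookies {
			if plain, err := decrypt(c.Value); err == nil {
				r.AddCookie(&http.Cookie{Name: c.Name, Value: plain})
			}
		}
		cw := &cookieWriter{ResponseWriter: w, encrypt: encrypt}
		next.ServeHTTP(cw, r)
		if !cw.wroteHeader {
			cw.WriteHeader(http.StatusOK) // handler wrote nothing; still encrypt
		}
	})
}

// Toy stand-ins for the demo only.
func toyEncrypt(s string) (string, error) { return base64.RawURLEncoding.EncodeToString([]byte(s)), nil }
func toyDecrypt(s string) (string, error) {
	b, err := base64.RawURLEncoding.DecodeString(s)
	return string(b), err
}

// demo sets a "token" cookie and echoes the one it received, if any.
var demo = http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
	http.SetCookie(w, &http.Cookie{Name: "token", Value: "abc123", Path: "/"})
	if c, err := r.Cookie("token"); err == nil {
		fmt.Fprint(w, c.Value)
	}
})

// serve runs one request, optionally with a raw "token" cookie.
func serve(h http.Handler, tokenCookie string) (setCookie, body string) {
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	if tokenCookie != "" {
		req.AddCookie(&http.Cookie{Name: "token", Value: tokenCookie})
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Header().Get("Set-Cookie"), rec.Body.String()
}

func main() {
	h := EncryptCookies(toyEncrypt, toyDecrypt, demo)
	fmt.Println(serve(h, ""))         // token=YWJjMTIz; Path=/
	fmt.Println(serve(h, "YWJjMTIz")) // token=YWJjMTIz; Path=/ abc123
}
```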

---

And that's what I worked on over these last three days, today being the day I actually used all this functionality and implemented the OAuth2 process, using an interface and a default implementation that I can easily reimplement for special cases like Mastodon's OAuth process (since the token and the OAuth application need to be created on each instance separately). It has been interesting learning Go and trying to be more effective, implementing things the way the language wants. Everything has been very simple nonetheless; it's mostly a matter of aligning my mind with the language.

It has been a while since I wrote one of these long posts, and I remembered why: they take hours to write, but I'd say they're worth the work. Unfortunately I can't write these every day, but hopefully they will become more common, so I can better log the process of working on my projects. Also, for the two people who read this blog, give me some feedback! I'd really like to know if there's anything I could improve in the writing, anything that ended up being confusing, or even how I could write image descriptions for the code snippets; I'm not sure how to make them more accessible for screen reader users.

Nevertheless, completing this project will also help me make these posts, since the Markdown-to-NPF conversion in Tumblr's web editor sucks ass, and I know I can do it better.


Critical Vulnerability (CVE-2024-37032) in Ollama

Researchers have discovered a critical vulnerability in Ollama, a widely used open-source project for running Large Language Models (LLMs). The flaw, dubbed "Probllama" and tracked as CVE-2024-37032, could potentially lead to remote code execution, putting thousands of users at risk.

What is Ollama?

Ollama has gained popularity among AI enthusiasts and developers for its ability to perform inference with compatible neural networks, including Meta's Llama family, Microsoft's Phi clan, and models from Mistral. The software can be used via a command line or through a REST API, making it versatile for various applications. With hundreds of thousands of monthly pulls on Docker Hub, Ollama's widespread adoption underscores the potential impact of this vulnerability.

The Nature of the Vulnerability

The Wiz Research team, led by Sagi Tzadik, uncovered the flaw, which stems from insufficient validation on the server side of Ollama's REST API. An attacker could exploit this vulnerability by sending a specially crafted HTTP request to the Ollama API server. The risk is particularly high in Docker installations, where the API server is often publicly exposed.

Technical Details of the Exploit

The vulnerability specifically affects the `/api/pull` endpoint, which allows users to download models from the Ollama registry and private registries. Researchers found that when pulling a model from a private registry, it's possible to supply a malicious manifest file containing a path traversal payload in the digest field. This payload can be used to:

- Corrupt files on the system
- Achieve arbitrary file read
- Execute remote code, potentially hijacking the system

The issue is particularly severe in Docker installations, where the server runs with root privileges and listens on 0.0.0.0 by default, enabling remote exploitation. As of June 10, despite a patched version being available for over a month, more than 1,000 vulnerable Ollama server instances remained exposed to the internet.

Mitigation Strategies

To protect AI applications using Ollama, users should:

- Update instances to version 0.1.34 or newer immediately
- Implement authentication measures, such as using a reverse proxy, as Ollama doesn't inherently support authentication
- Avoid exposing installations to the internet
- Place servers behind firewalls and only allow authorized internal applications and users to access them

Broader Implications for AI and Cybersecurity